Description:

Long story short: I'm not satisfied with the current state of technology, but the most annoying thing for me right now is how container runtimes work. I have to manage Docker images and deliver them to every host where they are used. That is a heavy management burden, and an even heavier one in terms of I/O: network transfers and storage data movement. Why do I need to move the whole set of OCI layers if the app inside uses only a small subset of the files in those layers?

I know what you're thinking: "just put only the things you really need in the Docker image, stupid". You would be wrong, for many reasons. It's easy to put a single binary (with all its dependencies) inside a Docker image and be happy. But the world is a cruel place and forces you to include many, or even all, indirectly used binaries and libraries, scripts, data in different formats, machine learning models, etc. On top of that, you end up with a myriad of Docker images and the management burden of keeping them in sync and consistent with each other.

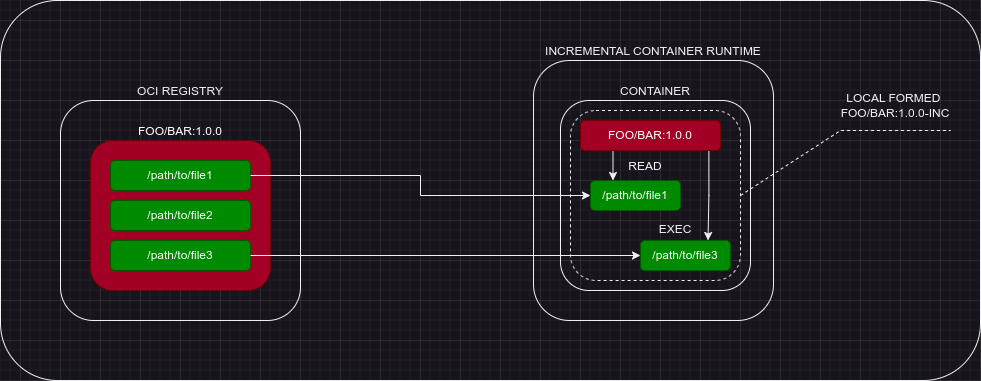

Once again, you have to carry a fully packed suitcase even if you only need a toothbrush and socks. A solution to such problems could be an incremental container runtime, where only the needed files are fetched from the registry image and this subset of files forms the Docker image on the end host: